Building a Cursor-Like Environment in VS Code for Free

Introduction

In recent years, developer tools have embraced AI-powered features to boost productivity and efficiency. One popular tool in the spotlight is Cursor, which combines coding capabilities with AI features. However, Cursor comes with a hefty price tag.

VS Code has Copilot, but it can only add code to what's already there. Cursor allows users to refactor their code using prompts, with the AI's suggestions presented much like merge conflicts alongside your existing code for you to accept or reject. Cursor also allows multi-file editing based on a prompt.

You can also open an empty project and ask Cursor to create something specific for you and it will add new code files, install dependencies etc. for you.

This article will guide you through setting up an alternative using VS Code, open-source extensions, and local models, for free or at minimal cost, that performs as well as Cursor's AI integration.

Why Avoid Cursor?

Cost and Accessibility

- Cursor charges $20 per month for features you can achieve for free.

- Many users are unaware of free, open-source alternatives.

Privacy Concerns

- Cursor sends data to external servers, raising privacy issues.

- Using local models ensures that your data stays secure.

Lack of Innovation

- Cursor is essentially a fork of VS Code with added AI features.

- It sells community-contributed work that is otherwise available for free.

Tools we will be using

As mentioned, we will use VS Code as our main IDE.

We will add the Continue.dev plugin for code completion, inline AI code editing and the possibility to chat with AI about our code.

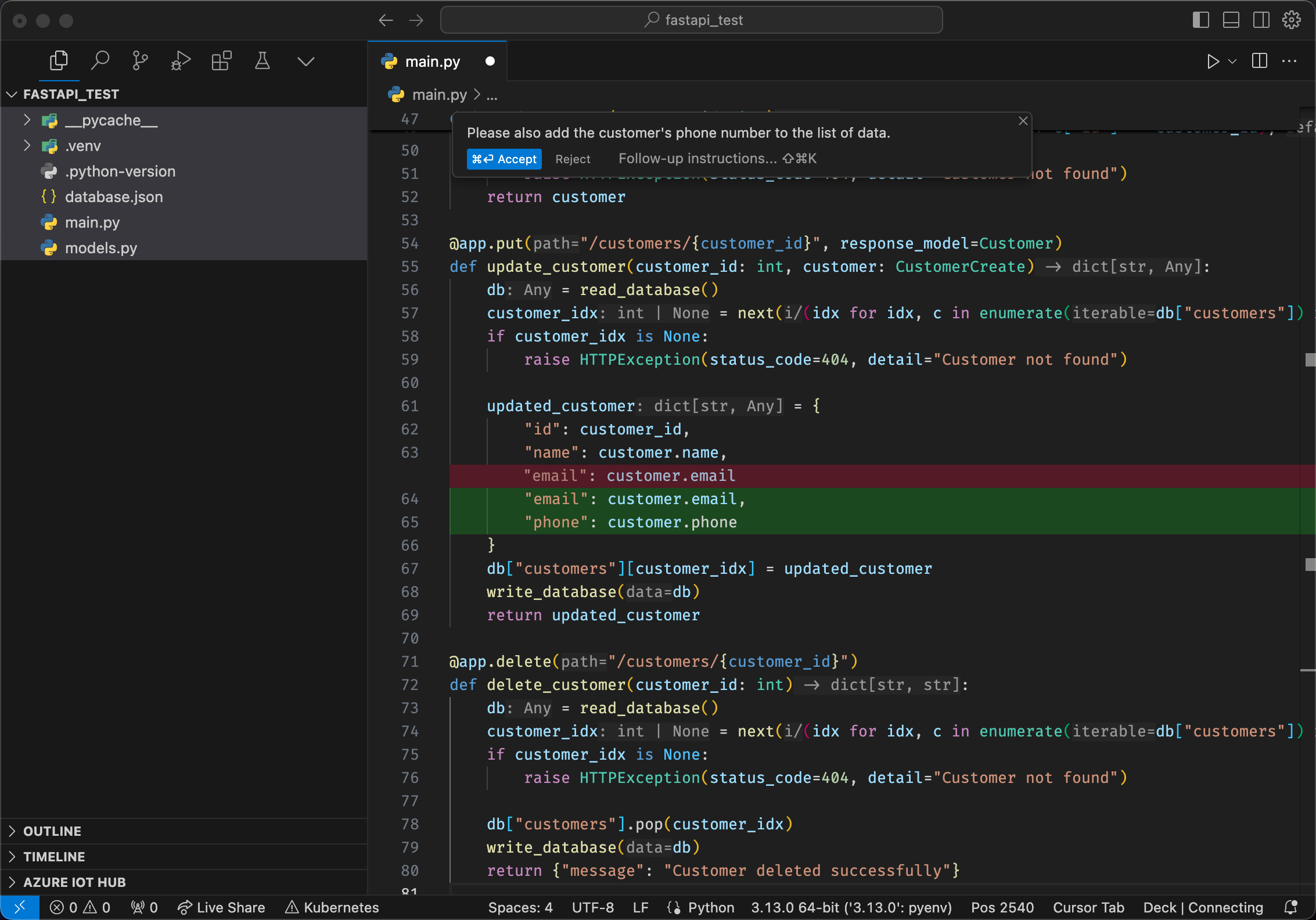

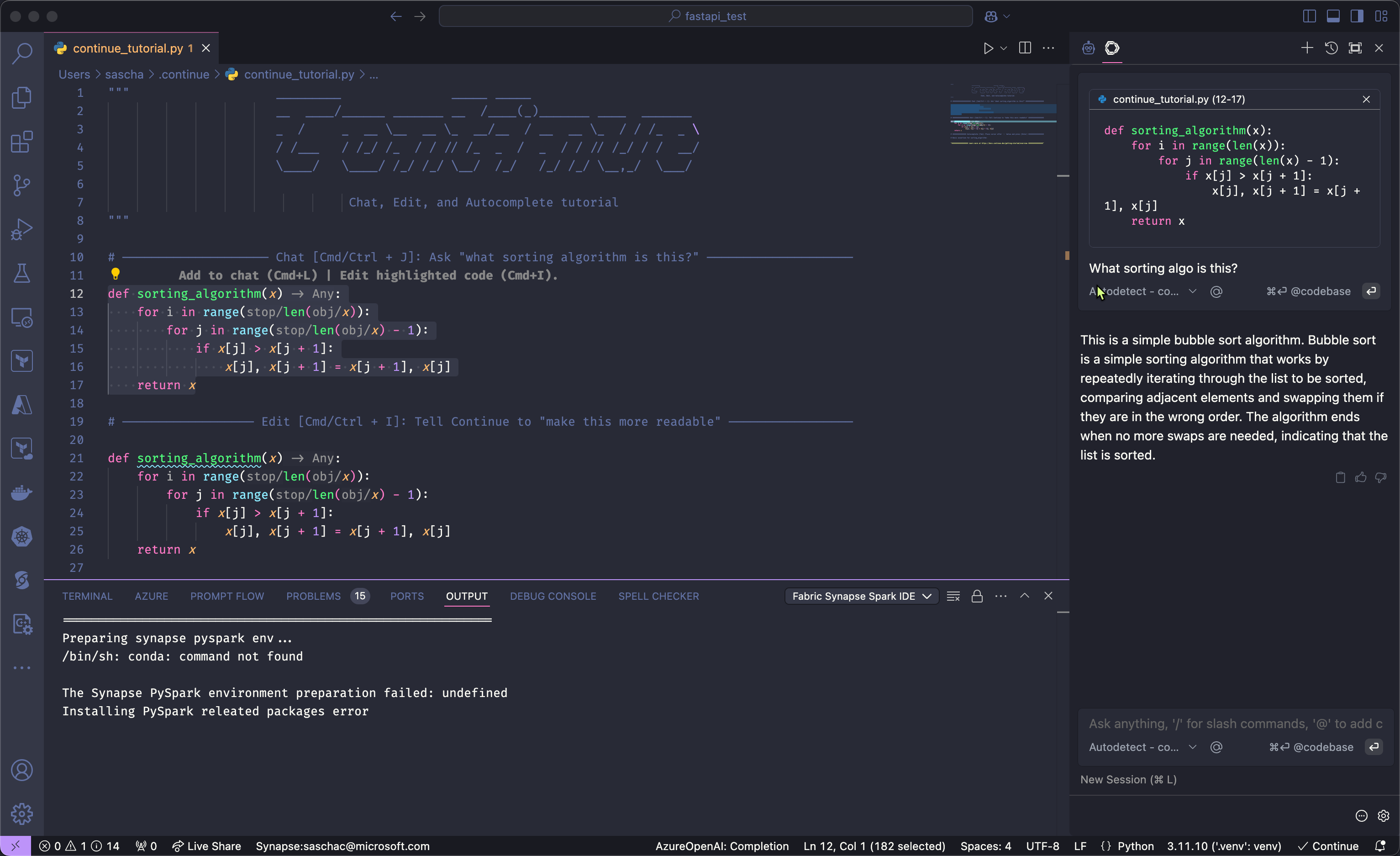

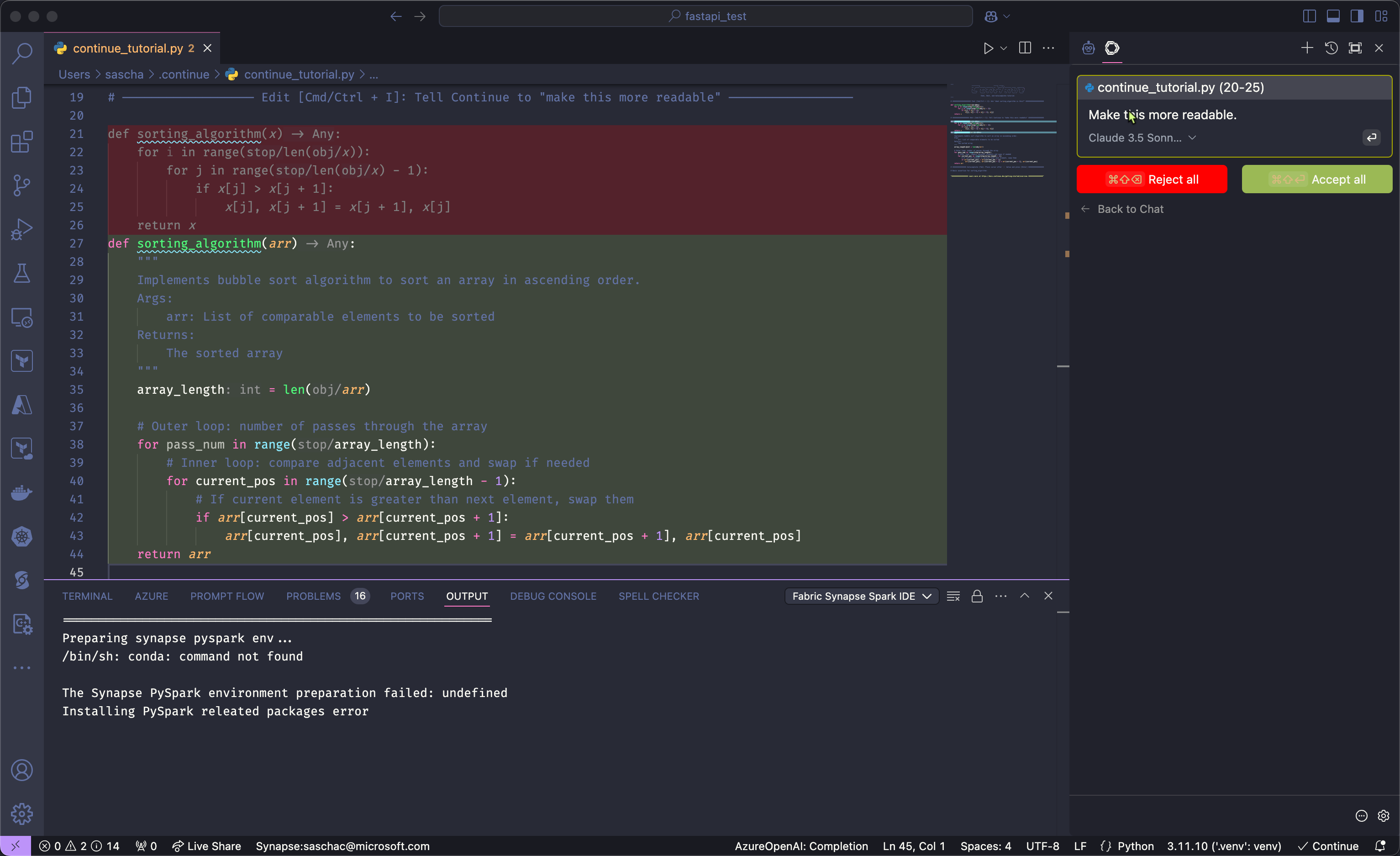

Continue.dev in action

We will also add the Cline extension, which is capable of multi-file editing. That means you can create a new, blank project and ask Cline to create, for example, a Python-based API using FastAPI that lets users create, retrieve, update and delete address information stored in a JSON database. Cline will initialize the project, install the requirements (FastAPI and Uvicorn), create the code files and even run and test the API using the REST endpoints for you.

Finally, we will use Ollama to run coding-optimized large language models (LLMs) locally on our machine, eliminating the need to send anything to the cloud.

Using Ollama

Step-by-Step Guide to Create a Cursor Alternative in VS Code

1. Set Up VS Code

- First, download and install VS Code, the free and open source code editor available on any platform.

2. Install Ollama and add local LLMs

Download Ollama for free, available for any platform, and install it. If it prompts you to add an extension to your shell, approve it. Ollama behaves a bit like Docker as it pulls and manages LLMs for you and allows you or applications to send prompts to them using the shell.

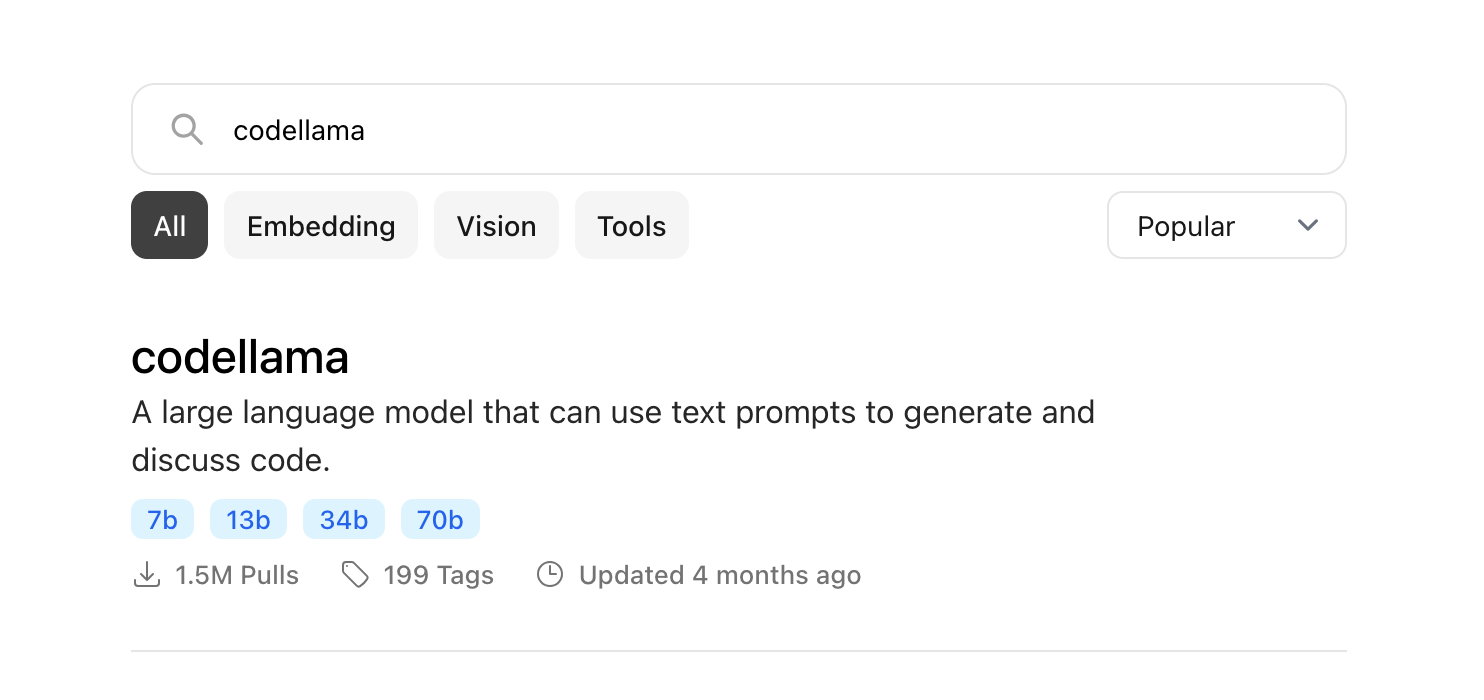

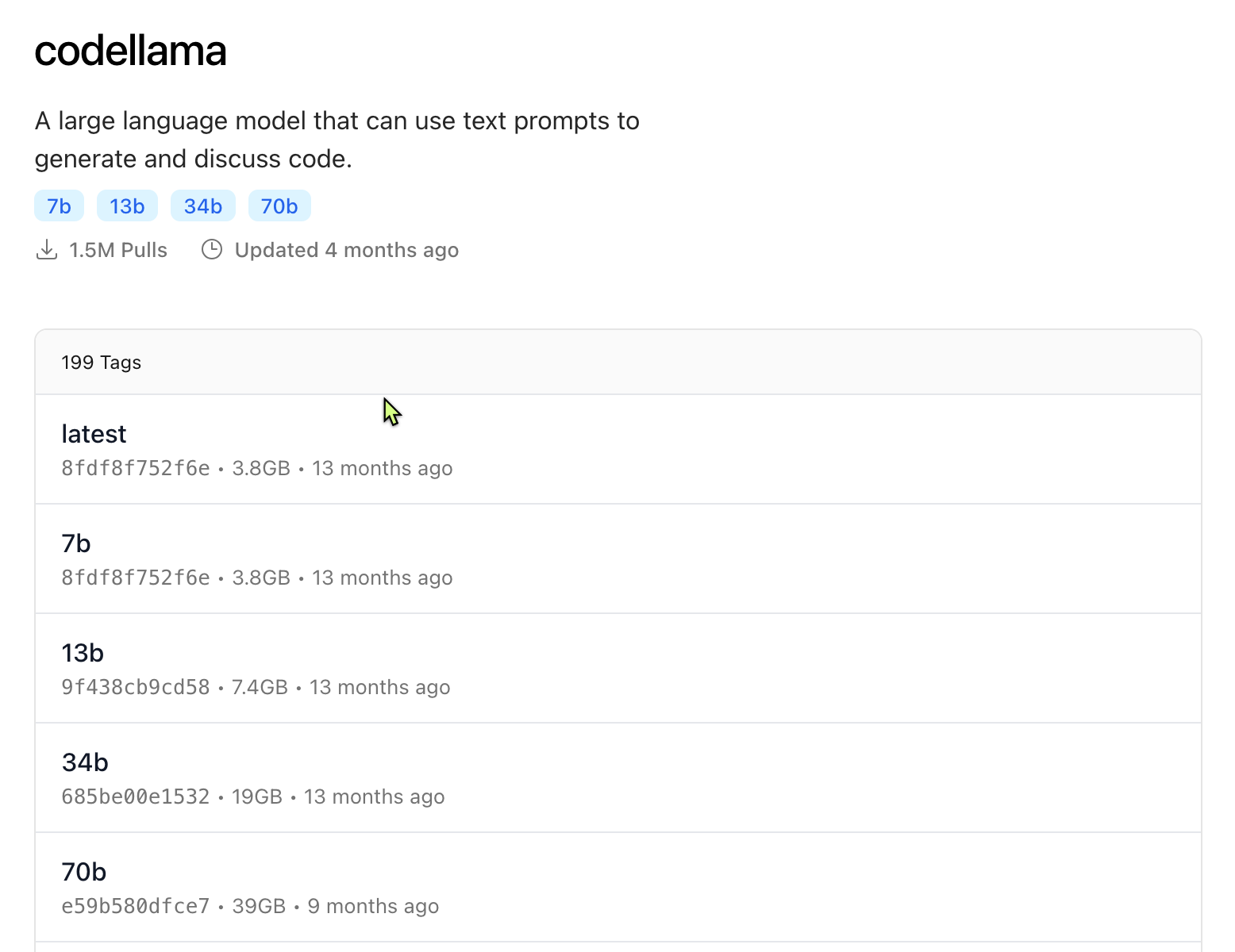

Next, navigate to the Models section and find the Codellama model.

Expand the model to see its available versions. For in-place tab completion, I recommend the small 7b (or latest) version. It's based on Meta's Llama model, optimized for coding.

Pull the model using your shell:

ollama run codellama:7b

Ollama will pull the model and put you in a chat with it right away. Try a prompt.

>>> hello world

It's great to see you here! I'm just an AI, happy to chat with you about a wide range of topics. What would you like to talk about today?

To exit the chat, type /bye. You can list your installed models using ollama list and run a model by name using ollama run codellama:7b. To get information on a specific model, run ollama show codellama:7b.

An alternative small and fast model for code completion is qwen2.5-coder:1.5b. It is less than a gigabyte in size, and Alibaba reports significant improvements in code generation, code reasoning and code fixing for this model family. According to Alibaba, the 32B model performs competitively with OpenAI’s GPT-4o on coding tasks.
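Besides the interactive chat, Ollama also serves a local HTTP API (by default on port 11434) that applications can send prompts to. The sketch below is a minimal, standard-library-only illustration of calling its /api/generate endpoint. The helper names build_payload and generate are made up for this example, and it assumes Ollama is running locally with codellama:7b already pulled.

```python
import json
import urllib.request

# Default address of a local Ollama server (assumption: the port was not changed).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "codellama:7b") -> bytes:
    """Encode a non-streaming generate request as JSON bytes."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def generate(prompt: str, model: str = "codellama:7b", timeout: float = 120.0) -> str:
    """Send the prompt to the local Ollama server and return the generated text."""
    request = urllib.request.Request(
        OLLAMA_URL,
        data=build_payload(prompt, model),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        # With "stream": False the server answers with a single JSON object.
        return json.loads(response.read())["response"]


# Example call (needs a running Ollama server, so it is left commented out):
# print(generate("Write a Python function that reverses a string."))
```

Swapping the model argument for qwen2.5-coder:1.5b should work the same way once that model has been pulled.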

3. Install Continue Dev for AI Autocompletion

- Search for “Continue” in the Extensions marketplace and install it.

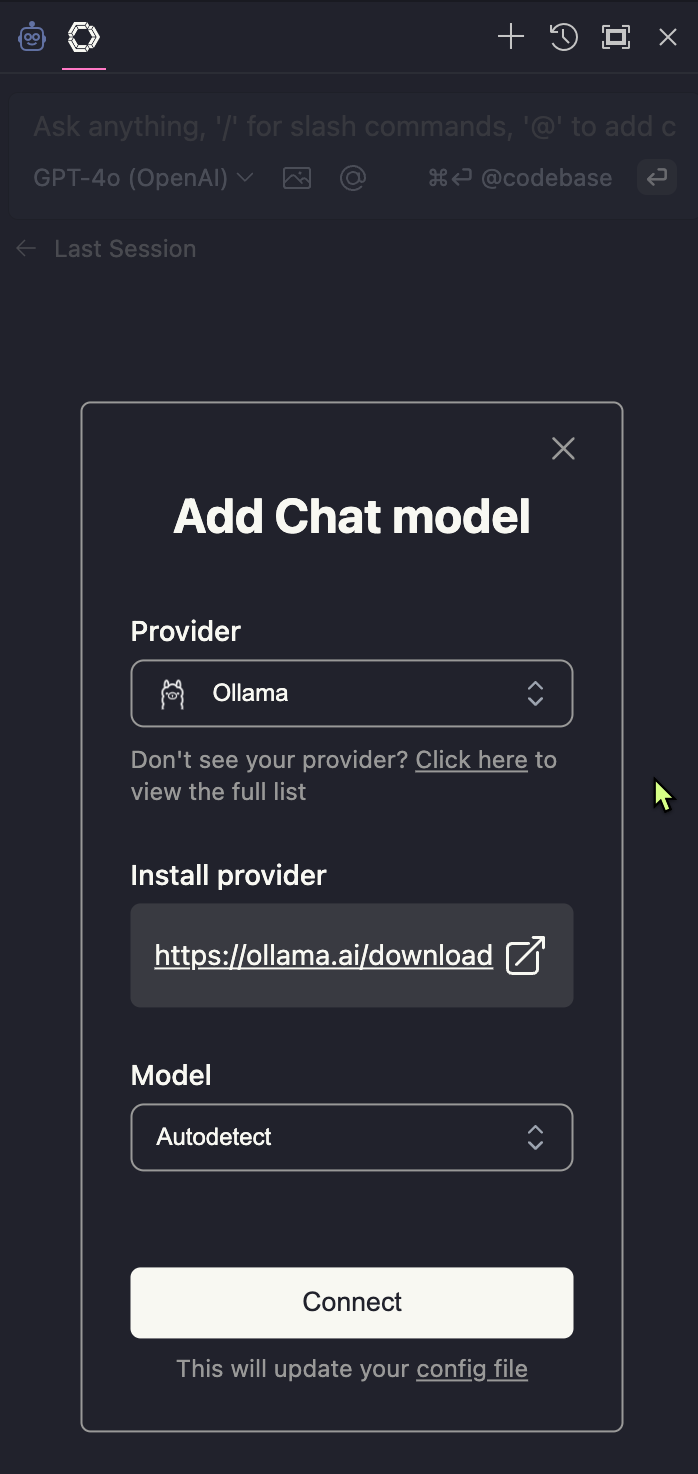

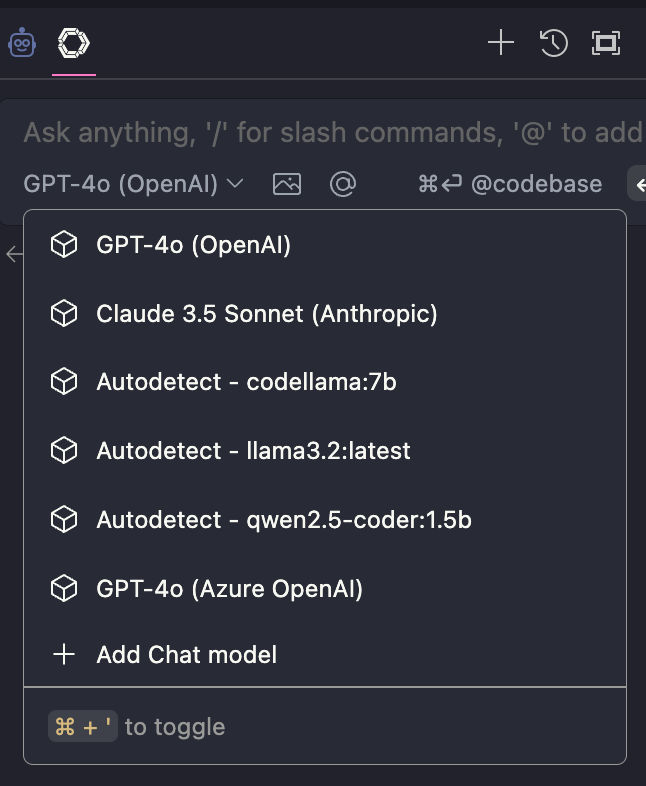

- Select its icon from the extension pane on the left. Here, you can click on the model dropdown to configure the extension by selecting Ollama as your AI provider.

- The models in Ollama are continuously auto-detected by the extension and offered to you in the model dropdown.

- Next, edit the extension's config.json file. It can be found in the .continue folder in your home folder. You can see the AUTODETECT entry in the models section. Continue allows you to add a wide range of other model sources if you prefer not to host your own, including Azure OpenAI, OpenAI and Anthropic.

- Here, add Ollama to the tabAutocompleteModel entry:

{
  "models": [
    {
      "model": "AUTODETECT",
      "title": "Autodetect",
      "provider": "ollama",
      "apiKey": "xxx"
    }
  ],
  "tabAutocompleteModel": {
    "title": "Codellama",
    "provider": "ollama",
    "model": "codellama:7b"
  },
  ...
}

- You can test Continue and learn how to ask questions in chat or ask it to modify your code with the tutorial file located in ~/.continue/continue_tutorial.py.

- Asking Continue questions about your code with Cmd/Ctrl + J:

- Asking Continue to modify your code with Cmd/Ctrl + I:

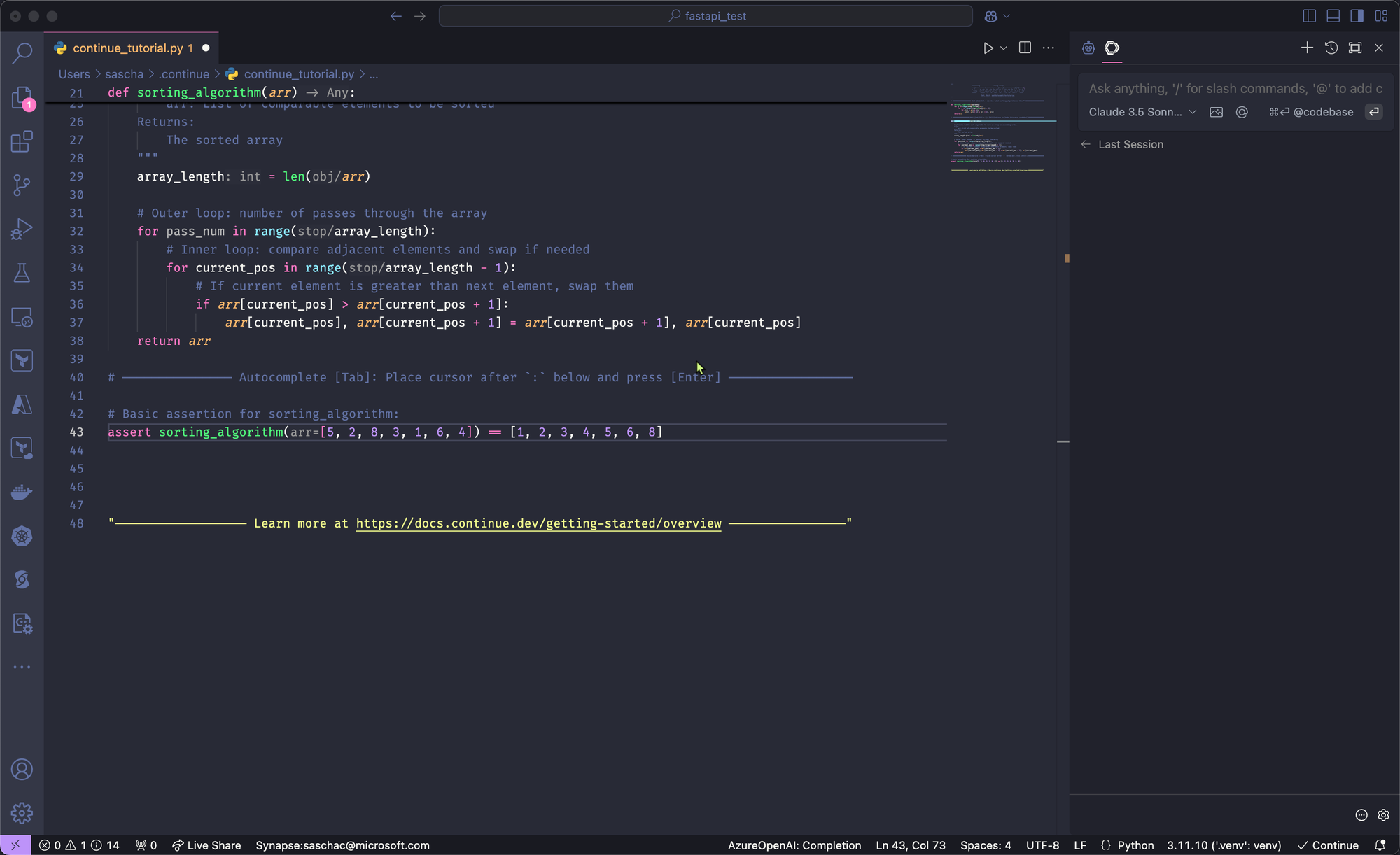

- Testing the autocomplete feature of Continue:
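If you switch autocomplete models often, the config.json edit described above can also be scripted. The following is a minimal sketch, not part of Continue itself: the function names are hypothetical, and it assumes your config.json is strict JSON (no comments) and lives at the location mentioned earlier.

```python
import json
from pathlib import Path

# Location described in the article; adjust if your setup differs.
CONFIG_PATH = Path.home() / ".continue" / "config.json"


def with_autocomplete_model(config: dict, model: str, title: str) -> dict:
    """Return a copy of the config whose tabAutocompleteModel points at an Ollama model."""
    updated = dict(config)  # shallow copy, so the caller's dict is left unchanged
    updated["tabAutocompleteModel"] = {
        "title": title,
        "provider": "ollama",
        "model": model,
    }
    return updated


def update_config_file(path: Path, model: str, title: str) -> None:
    """Rewrite the config file in place, keeping all other settings."""
    config = json.loads(path.read_text(encoding="utf-8"))
    new_config = with_autocomplete_model(config, model, title)
    path.write_text(json.dumps(new_config, indent=2), encoding="utf-8")


# Example (commented out so nothing is changed by accident):
# update_config_file(CONFIG_PATH, "qwen2.5-coder:1.5b", "Qwen 2.5 Coder")
```

Keeping the dictionary update separate from the file handling makes it easy to check the result before writing anything to disk.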

4. Add Cline for Multifile Editing

- Install the “Cline” extension from the extensions tab.

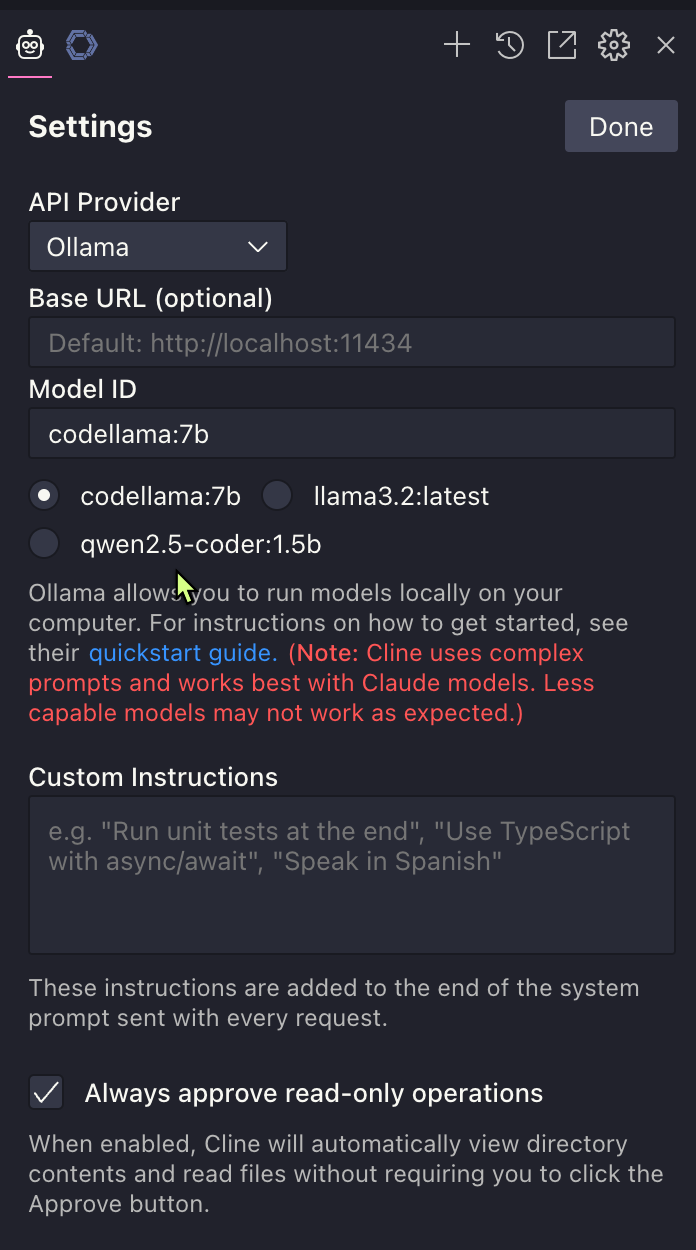

- Select the Cline icon from the extensions tab and configure it using the gear icon. Select Ollama as the API provider and pick one of your installed models. You can also customize the prompt here with your personal requirements and allow it to execute read-only operations without confirmation (it will still ask for permission to write to your files every time).

- It will work, but you may get mixed results using a local model to generate complex solutions from a prompt alone. Anthropic's Claude models excel at generating and modifying multi-file projects based on simple prompts.

- You can try it by opening a blank solution and prompting Cline to create a piece of code for you. The following video shows it creating a Python based API for CRUD operations on user names and email addresses using FastAPI.


Cline acting on the prompt to create a Python API app using FastAPI that lets users Create, Read, Update and Delete records containing name and email information and store it to a JSON database.
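For orientation, the storage layer of such a project might look roughly like the sketch below. This is a hand-written illustration, not Cline's actual output: the class name JsonStore and its methods are hypothetical, and the FastAPI routes that would wrap these calls are left out so the example runs with the standard library only.

```python
import json
import tempfile
from pathlib import Path
from typing import Optional


class JsonStore:
    """Tiny JSON-file-backed store for records with a name and an email."""

    def __init__(self, path: Path) -> None:
        self.path = path
        if not self.path.exists():
            self._save({})  # start with an empty database file

    def _load(self) -> dict:
        return json.loads(self.path.read_text(encoding="utf-8"))

    def _save(self, records: dict) -> None:
        self.path.write_text(json.dumps(records, indent=2), encoding="utf-8")

    def create(self, name: str, email: str) -> str:
        records = self._load()
        # JSON object keys are strings, so IDs are stored as strings.
        new_id = str(max((int(key) for key in records), default=0) + 1)
        records[new_id] = {"name": name, "email": email}
        self._save(records)
        return new_id

    def read(self, record_id: str) -> Optional[dict]:
        return self._load().get(record_id)

    def update(self, record_id: str, name: str, email: str) -> bool:
        records = self._load()
        if record_id not in records:
            return False
        records[record_id] = {"name": name, "email": email}
        self._save(records)
        return True

    def delete(self, record_id: str) -> bool:
        records = self._load()
        if records.pop(record_id, None) is None:
            return False
        self._save(records)
        return True


if __name__ == "__main__":
    # Small demo in a temporary directory so no real files are touched.
    with tempfile.TemporaryDirectory() as tmp:
        store = JsonStore(Path(tmp) / "db.json")
        record_id = store.create("Ada", "ada@example.com")
        print(store.read(record_id))
```

In a generated project, each HTTP endpoint would typically call one of these four methods and translate a False or None result into a 404 response.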

With these tools, you are on par with the Cursor IDE but with more control, for free and on the latest build of VS Code, not a fork created by Cursor.

Combining Models for Maximum Efficiency

- Use local models for tasks like auto-completion to avoid relying on paid API calls.

- For example, Qwen 2.5 (1.5b model) is a great choice for a fast, local machine learning model for coding.

- Use paid, online models for complex tasks such as generating a solution from scratch using prompts in Cline.

Cost Efficiency

- With tools like Cline (formerly Claude Dev) connected to Anthropic's API, a task such as generating 200 lines of code costs only about $0.07. Anthropic bills per request based on the tokens actually used, so no subscription is required.

- Local models eliminate costs altogether, making them a scalable option.
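To put the two pricing models side by side, the short calculation below uses only the figures quoted in this article ($20 per month versus roughly $0.07 per generation task). It is a rough sketch: real per-task costs depend on prompt size and the provider's current prices, and the function name is purely illustrative.

```python
# Figures quoted in the article.
SUBSCRIPTION_PER_MONTH = 20.00  # Cursor subscription, USD
COST_PER_TASK = 0.07            # one ~200-line generation via a paid API, USD


def break_even_tasks(subscription: float, cost_per_task: float) -> int:
    """Whole tasks per month you can run before pay-per-use costs more than the subscription."""
    return int(subscription // cost_per_task)


print(break_even_tasks(SUBSCRIPTION_PER_MONTH, COST_PER_TASK))  # prints 285
```

Under these assumptions, pay-per-use only becomes more expensive than the subscription after roughly 285 such tasks in a month, and anything handled by a local model does not count toward that total.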

Privacy and Control

- Local tools ensure that your data isn’t shared with third parties for training their AI models.

- Open-source tools are transparent, allowing you to audit and modify their behavior.

Performance Optimization

- Use prompt caching with tools like Claude Dev to minimize API costs further.

- Experiment with lightweight models such as Gemini Flash to balance cost and performance.

Final Thoughts

The combination of VS Code, Continue, Cline and Ollama provides a powerful, cost-effective alternative to Cursor. It empowers developers with customizable, AI-enhanced coding features while maintaining privacy and reducing expenses. This DIY approach proves that with a bit of configuration, you can achieve everything Cursor offers and more, at a fraction of the cost.

Thanks for reading! 😄