DeepSeek’s Open-Source Models: A Technical Deep Dive

In the rapidly evolving landscape of large language models (LLMs), DeepSeek’s DeepSeek-v3 and DeepSeek-r1 stand out as open-weight alternatives to proprietary offerings such as OpenAI’s GPT-4 and the reasoning-focused "o1" family, both available through ChatGPT. Both DeepSeek models can be run locally using Ollama, which is good news for individuals or organizations seeking full control over their LLMs and the data they process.

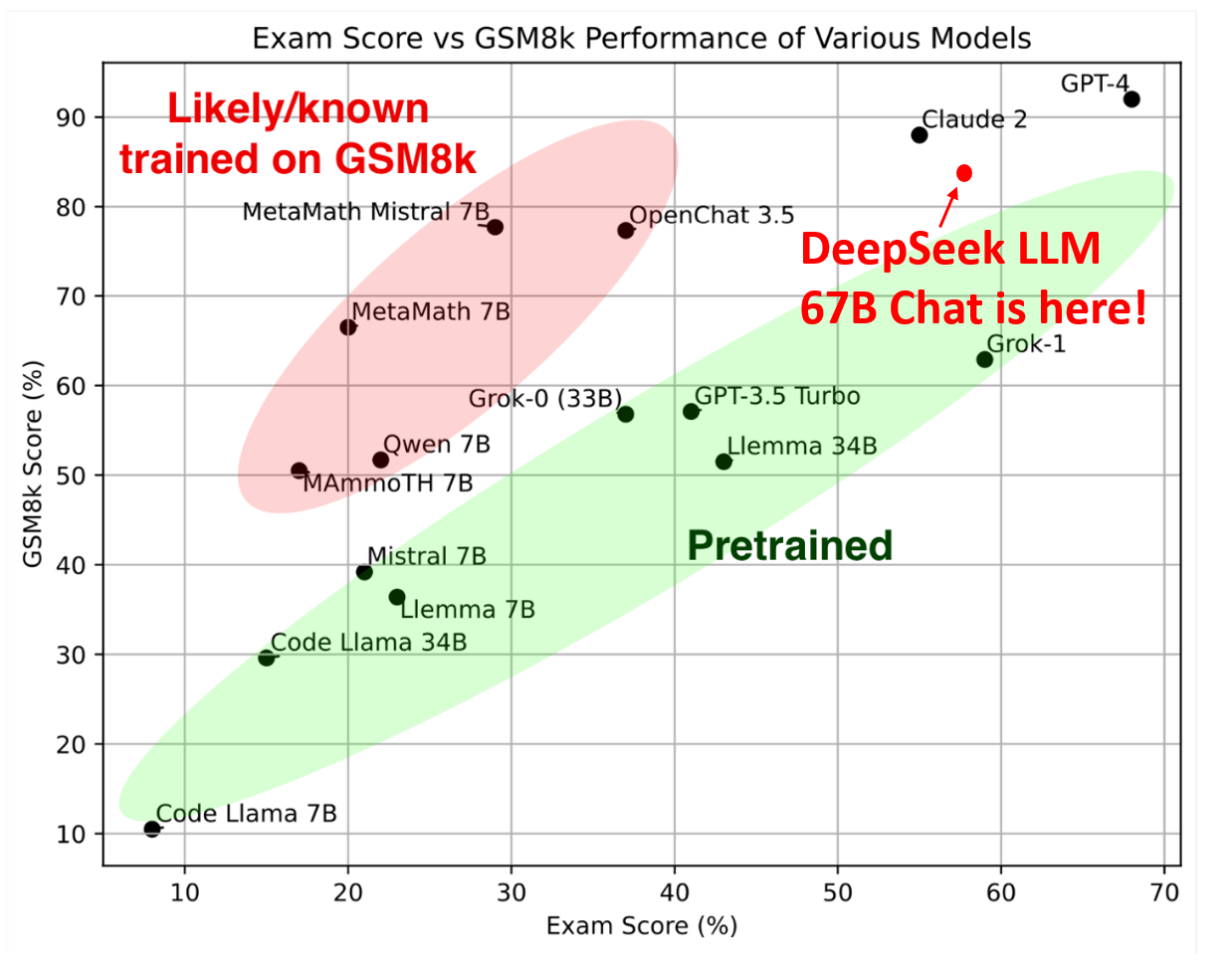

Hungarian National High School Exam: Following Grok-1, DeepSeek evaluated the mathematical capabilities of its initial LLM on the Hungarian National High School Exam. The exam comprises 33 problems, and scores are assigned by human annotators, who apply the scoring rubric in solution.pdf to every model.

Overview of DeepSeek-v3 and DeepSeek-r1

DeepSeek-v3

DeepSeek-v3 is the flagship model in DeepSeek’s portfolio, focusing on large-scale natural language understanding and generation. It features:

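- Parameter Count: A Mixture-of-Experts (MoE) model with 671 billion total parameters, of which about 37 billion are activated per token. GPT-4’s size has not been disclosed, so a direct size comparison is not possible.

- Architecture: Utilizes a transformer-based MoE architecture with Multi-head Latent Attention (MLA), optimized for multitask learning and extended context windows (up to 128K tokens).

- Training Data: Pre-trained on a massive, diverse corpus of roughly 14.8 trillion tokens, covering general text, technical documents, code snippets, and more.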

- Performance: Aims to achieve near state-of-the-art performance in general question answering, text summarization, and creative writing.
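DeepSeek describes DeepSeek-v3 as a Mixture-of-Experts model, meaning only a subset of the network's "experts" runs for each token. The sketch below illustrates generic top-k routing with a softmax gate. It is not DeepSeek's actual router: the toy experts, the gate scores, and the function names are all hypothetical and chosen only to show the idea.

```python
import math

def softmax(scores):
    """Convert raw scores to probabilities (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights.

    Returns a list of (expert_index, weight) pairs whose weights sum to 1.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_layer(x, experts, gate_scores, k=2):
    """Combine the outputs of only the selected experts for one token."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))

# Hypothetical toy experts: each one is just a scalar function.
experts = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3, lambda x: x * x]
gate_scores = [0.1, 2.0, -1.0, 2.0]  # made-up router scores for one token

selected = route_top_k(gate_scores, k=2)
output = moe_layer(3.0, experts, gate_scores, k=2)
print(selected, output)
```

Only two of the four experts are evaluated here, which is why an MoE model's per-token compute tracks its activated parameters rather than its total parameter count.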

In the DeepSeek-V3 GitHub repo, you can find:

- Model Weights & Checkpoints: Hosted within the repository or linked to external storage for easy access.

- Setup Instructions: Guidance on installing required dependencies, including Python libraries and GPU drivers.

- Usage Examples: Sample Python scripts or Jupyter notebooks illustrating how to load the model, perform inference, and fine-tune for specialized domains.

- Docker & CI/CD: Containerized environments or continuous integration workflows for reproducible deployments and testing.

- Community Contributions & Issues: Actively managed pull requests, bug reports, and feature discussions.

DeepSeek-r1

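DeepSeek-r1 is the reasoning-focused sibling of DeepSeek-v3. The full model is built on the DeepSeek-v3 base model, while a family of smaller distilled variants targets specialized tasks and resource-constrained environments. Key features include:

- Parameter Count: The full model has 671 billion total parameters. The distilled variants (roughly 1.5B to 70B parameters, based on Qwen and Llama models) have significantly fewer parameters, offering faster inference on commodity hardware.

- Domain-Specific Optimization: Trained with large-scale reinforcement learning aimed at reasoning. The distilled variants can be further fine-tuned for domain-specific tasks such as enterprise document understanding, sentiment analysis, or structured data extraction.

- Reasoning Strength: Particularly optimized for step-by-step logical reasoning and problem-solving, for example in math and code.

- Efficiency: The distilled variants require far less GPU memory, making them practical for local deployment on devices with limited compute resources.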
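When DeepSeek-r1 is run locally, its responses typically place the intermediate reasoning inside `<think>...</think>` tags ahead of the final answer. As a minimal sketch, assuming that tag format, the hypothetical helper `split_reasoning` below separates the two parts so an application can log the reasoning and show only the answer.

```python
import re

# Assumed format: reasoning wrapped in a single <think>...</think> block.
THINK_PATTERN = re.compile(r"<think>(.*?)</think>", re.DOTALL)

def split_reasoning(text):
    """Return (reasoning, answer) from a response that may contain a <think> block."""
    match = THINK_PATTERN.search(text)
    if match is None:
        # No reasoning block found: treat the whole response as the answer.
        return "", text.strip()
    reasoning = match.group(1).strip()
    answer = (text[:match.start()] + text[match.end():]).strip()
    return reasoning, answer

# Made-up sample response used only for illustration.
sample = "<think>\n2 + 2 is 4, and 4 * 3 is 12.\n</think>\n\nThe result is 12."
reasoning, answer = split_reasoning(sample)
print("Reasoning:", reasoning)
print("Answer:", answer)
```

If the tag format differs in your setup, only `THINK_PATTERN` should need adjusting.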

In the DeepSeek-r1 GitHub repo, you can find:

- Fine-Tuning Scripts: Preconfigured scripts and guidance for customizing DeepSeek-r1 on domain-specific data.

- Recommended System Requirements: Suggested GPU/CPU configurations for optimal performance.

- Docker Environments: Container images for consistent deployments across different infrastructures.

- Transformer Integration: Step-by-step instructions for using DeepSeek-r1 with popular frameworks like Hugging Face Transformers.

- Community Support: Actively monitored issues and pull requests for troubleshooting, new feature requests, and general discussions.

How They Compare to OpenAI’s GPT-4 and o1 Models

GPT-4

GPT-4 is a proprietary large-scale model from OpenAI. While it excels at a wide range of tasks, its closed-source nature means that it can only be accessed through APIs or hosted services. DeepSeek-v3 stands toe-to-toe with GPT-4 in certain benchmarks, especially in:

- Text Summarization: DeepSeek-v3 maintains high fidelity and accuracy.

- Conversational AI: Offers context retention across multiple dialogue turns.

- Reasoning: Can handle logical and analytical tasks well, though GPT-4 still has a slight edge in complex reasoning scenarios.

OpenAI o1

OpenAI’s "o1" series is a family of reasoning-focused models that work through a problem step by step before answering. Its main strength, like that of DeepSeek-r1, is reasoning on tasks where consistent logic and inference across longer text segments are required. DeepSeek reports that DeepSeek-r1 performs comparably to o1 on math, code, and reasoning benchmarks, and it may outperform o1 in some niche scenarios or specialized workflows. Because its weights are openly available, DeepSeek-r1 (or one of its distilled variants) can also be deployed locally, including on limited hardware, which o1 does not allow.

Open Source Licensing

One of the biggest advantages of DeepSeek models is their open licensing. This provides transparency and trust, since users can inspect the architecture and weights directly (the training data itself is not published). This approach encourages:

- Community Contributions: Developers can propose improvements, plug in new training data, and create domain-specific variants.

- Rapid Iteration: When an issue is identified or a new technique emerges, the open-source community can rapidly implement and share changes.

- Data Privacy: Running the model locally ensures sensitive data never leaves your environment.

Running DeepSeek Locally with Ollama

Ollama is a CLI tool that makes running open-source LLMs on local machines straightforward. Key benefits include:

- Seamless Setup: Install Ollama, download DeepSeek-v3 or DeepSeek-r1 model files, and start prompting with minimal friction.

- GPU/CPU Support: Uses a GPU when available and falls back to CPU otherwise, which is workable mainly for smaller models such as the distilled DeepSeek-r1 variants.

- Model Management: Easy switching between different local models and versions.

- Performance Optimizations: Built-in quantization and optional half-precision support to reduce memory usage.
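To see why quantization matters for local deployment, it helps to estimate the memory taken by the weights alone: parameters multiplied by bits per parameter. The sketch below uses illustrative parameter counts and ignores activation memory, the KV cache, and quantization overhead, so treat the results as rough lower bounds rather than measurements.

```python
def weight_memory_gib(num_params, bits_per_param):
    """Approximate memory needed for the weights alone, in GiB."""
    return num_params * bits_per_param / 8 / 2**30

# Illustrative parameter counts (assumptions, not measurements of a specific build).
model_sizes = {"7B": 7e9, "70B": 70e9}
precisions = {"fp16": 16, "int8": 8, "4-bit": 4}

for name, params in model_sizes.items():
    for label, bits in precisions.items():
        print(f"{name} @ {label}: ~{weight_memory_gib(params, bits):.1f} GiB")
```

Under these assumptions, a 7B model needs roughly 13 GiB for weights at half precision and a little over 3 GiB at 4 bits, which is the difference between needing a workstation GPU and fitting on a laptop.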

Below is a quick start example:

    # Install Ollama (macOS with Homebrew; other platforms use the installer from ollama.com)
    brew install ollama

    # Pull the DeepSeek-r1 model
    ollama pull deepseek-r1

    # Run DeepSeek-r1 with local inference
    ollama run deepseek-r1

This simple workflow illustrates how accessible and flexible local LLM deployment can be with Ollama.
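Beyond the interactive CLI, a running Ollama server also exposes a local HTTP API, by default on port 11434. The following is a minimal sketch of a multi-turn chat call using only the Python standard library. The helper names `build_chat_payload` and `chat` are hypothetical, and the code assumes the server is already running and the `deepseek-r1` model has been pulled.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint

def build_chat_payload(model, history, user_message):
    """Return a request body that includes prior turns plus the new message."""
    messages = list(history) + [{"role": "user", "content": user_message}]
    return {"model": model, "messages": messages, "stream": False}

def chat(model, history, user_message, url=OLLAMA_URL):
    """Send one turn to a running Ollama server and return the reply text."""
    payload = build_chat_payload(model, history, user_message)
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    # Passing data makes this a POST request.
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["message"]["content"]

# Example usage (requires a running Ollama server, so it is left commented out):
# history = []
# print(chat("deepseek-r1", history, "Why is the sky blue?"))
```

Passing the earlier turns in `history` is what gives the model conversational context, since each request to the API is otherwise stateless.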

Practical Use Cases

- Enterprise Solutions: For businesses that handle proprietary or sensitive data, local deployment ensures compliance with internal policies.

- Academic Research: Researchers benefit from full transparency in model architecture and weights, facilitating reproducible studies.

- Edge & IoT: The smaller memory footprint and strong reasoning capabilities of the distilled DeepSeek-r1 variants enable advanced language tasks on constrained devices.

Conclusion

DeepSeek’s DeepSeek-v3 and DeepSeek-r1 models offer competitive performance and open-source flexibility in a market dominated by proprietary solutions like OpenAI’s GPT-4 and o1. Whether you need a large-scale solution or a more specialized, efficient model, DeepSeek’s offerings, run locally via Ollama, provide a robust path to building, experimenting with, and deploying high-performing language models.

Key Takeaways:

- Open Source: Transparent and extensible.

- Local Deployment: Enhanced privacy and control.

- Versatility: Both a large-scale general model (DeepSeek-v3) and a reasoning-focused model with efficient distilled variants (DeepSeek-r1) are available.

- Reasoning Focus: DeepSeek-r1 and OpenAI o1 excel in logical and analytical tasks.

- Competitive: On par with top-tier models for many use cases.

If you value the ability to fine-tune, audit, and innovate on your language model without relying on third-party services, DeepSeek’s models present a strong open-source option. Combined with tools like Ollama, they bring next-generation language modeling right to your local environment.