Integrating Model Context Protocol (MCP) with the OpenAI Agents SDK

The OpenAI Agents SDK now supports the Model Context Protocol (MCP), an open standard for connecting Large Language Models (LLMs) to external tools and data sources. With MCP, an agent can use tools hosted by any compatible server, in addition to those defined in the application itself.

Conceptual Overview of MCP

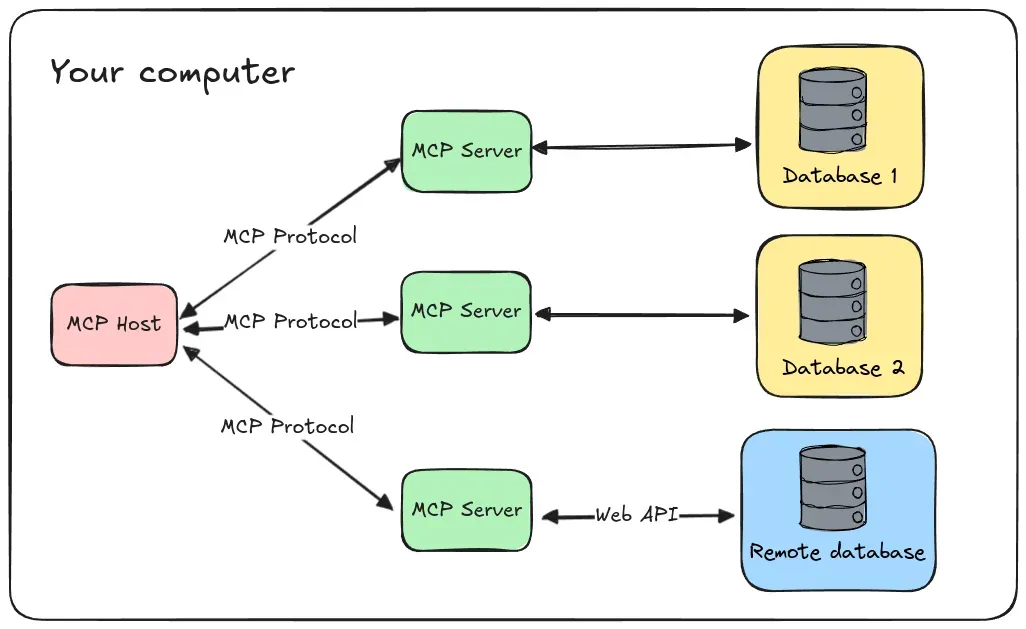

MCP provides a standardized interface through which LLMs and external resources exchange requests and results. The protocol distinguishes two kinds of servers according to the transport they use:

- Stdio servers: These run as a subprocess of your application and communicate with it over standard input and output, so they are effectively local.

- HTTP over Server-Sent Events (SSE) servers: These run remotely and are reached over the network through a URL, which suits distributed or cloud-based deployments.

The OpenAI Agents SDK includes dedicated classes to interact with both categories:

- MCPServerStdio: Responsible for handling local stdio server processes.

- MCPServerSse: Manages interactions with remote SSE-based servers.
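To make the stdio transport concrete, the sketch below spawns a child process and exchanges one newline-delimited JSON-RPC message with it over stdin and stdout. It is a toy illustration only: the child program and the `roundtrip` helper are hypothetical, the child simply echoes the method name, and none of the handshake a real MCP server performs is included.

```python
import json
import subprocess
import sys

# Child program standing in for a local server: it reads one JSON-RPC
# request per line on stdin and writes one JSON response per line on stdout.
CHILD_SOURCE = r'''
import json, sys
for line in sys.stdin:
    request = json.loads(line)
    response = {"jsonrpc": "2.0", "id": request["id"],
                "result": {"echo": request["method"]}}
    sys.stdout.write(json.dumps(response) + "\n")
    sys.stdout.flush()
'''


def roundtrip(method: str) -> dict:
    """Spawn the child, send one request on stdin, read one reply from stdout."""
    proc = subprocess.Popen(
        [sys.executable, "-c", CHILD_SOURCE],
        stdin=subprocess.PIPE,
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        request = {"jsonrpc": "2.0", "id": 1, "method": method}
        proc.stdin.write(json.dumps(request) + "\n")
        proc.stdin.flush()
        return json.loads(proc.stdout.readline())
    finally:
        proc.stdin.close()   # end of input lets the child exit its read loop
        proc.wait()
        proc.stdout.close()


reply = roundtrip("tools/list")
print(reply["result"])
```

An SSE server plays the same role, except that the messages travel over an HTTP connection to a URL instead of over the pipes of a local subprocess.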

Integration and Operational Dynamics of MCP Servers

Adding MCP servers to an agent is straightforward. Each time the agent runs, the SDK calls list_tools() on every registered MCP server, so the LLM always sees the current set of available tools. When the LLM chooses one of those tools, the SDK calls call_tool() on the server that provides it.
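The flow described above can be sketched as a small, SDK-free model. Everything here is hypothetical and exists only for illustration: `ToyServer`, `build_registry`, and `run_once` are not SDK classes or functions, and the real SDK passes tool schemas to the LLM rather than bare names.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToyServer:
    """Hypothetical stand-in for an MCP server; not an SDK class."""

    name: str
    tools: dict[str, Callable[..., Any]] = field(default_factory=dict)

    async def list_tools(self) -> list[str]:
        return sorted(self.tools)

    async def call_tool(self, tool_name: str, arguments: dict[str, Any]) -> Any:
        return self.tools[tool_name](**arguments)


async def build_registry(servers: list[ToyServer]) -> dict[str, ToyServer]:
    """Ask every server for its tools and record which server owns each one."""
    registry: dict[str, ToyServer] = {}
    for server in servers:
        for tool_name in await server.list_tools():
            registry[tool_name] = server
    return registry


async def run_once(
    servers: list[ToyServer], tool_name: str, arguments: dict[str, Any]
) -> Any:
    """Refresh the registry, then route the call to the owning server."""
    registry = await build_registry(servers)
    return await registry[tool_name].call_tool(tool_name, arguments)


files = ToyServer("files", {"read_file": lambda path: f"<contents of {path}>"})
calc = ToyServer("calc", {"add": lambda a, b: a + b})

result = asyncio.run(run_once([files, calc], "add", {"a": 2, "b": 3}))
print(result)
```

Because the registry is rebuilt on every run in this model, a tool added to a server becomes visible on the next run without any extra work, at the cost of one listing call per server per run.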

An example configuration is provided below:

    from agents import Agent
    from agents.mcp import MCPServerStdio, MCPServerSse

    # Initialize MCP servers
    mcp_server_1 = MCPServerStdio(
        params={
            "command": "npx",
            "args": ["-y", "@modelcontextprotocol/server-filesystem", "/path/to/dir"],
        }
    )
    mcp_server_2 = MCPServerSse(params={"url": "https://example.com/mcp-server"})

    # Agent configuration incorporating MCP servers
    agent = Agent(
        name="Assistant",
        instructions="Employ provided tools to fulfill assigned tasks.",
        mcp_servers=[mcp_server_1, mcp_server_2],
    )

This configuration gives the agent access to the tools exposed by both MCP servers, so it can handle tasks that depend on those external resources.
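A server has to be connected before the agent can use it. The following is a minimal sketch of one way to do that and run the agent, assuming the SDK's `agents.mcp` module, its async context manager support on server objects, and `Runner.run`. It also assumes that `npx` is installed and an OpenAI API key is configured; the prompt text is an arbitrary example, and `/path/to/dir` is the placeholder from the configuration above.

```python
import asyncio


async def main() -> None:
    # Imported inside the function so this sketch can be loaded
    # even where the SDK is not installed.
    from agents import Agent, Runner
    from agents.mcp import MCPServerStdio

    # Entering the context connects to the server; leaving it cleans up
    # the subprocess.
    async with MCPServerStdio(
        params={
            "command": "npx",
            "args": ["-y", "@modelcontextprotocol/server-filesystem", "/path/to/dir"],
        }
    ) as server:
        agent = Agent(
            name="Assistant",
            instructions="Use the provided tools to complete the task.",
            mcp_servers=[server],
        )
        result = await Runner.run(agent, "List the files you can access.")
        print(result.final_output)


# To run the sketch: asyncio.run(main())
```

Keeping the agent run inside the `async with` block ensures the server is still connected while the agent is working and is shut down afterwards.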

Performance Enhancement through Toolset Caching

Because list_tools() is called on every agent run, it can add noticeable latency, especially when the server is remote. To avoid this, the SDK can cache the tool list: pass cache_tools_list=True when creating the server, and subsequent runs reuse the cached list instead of calling list_tools() again.

    # Initialize an MCP server with tool-list caching enabled
    mcp_server = MCPServerSse(
        params={"url": "https://example.com/mcp-server"},
        cache_tools_list=True,
    )

Enable caching only when the tool list is known to be static. If the tools can change, call invalidate_tools_cache() on the server to discard the cached list, so that the next run fetches a fresh one.
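The trade-off between caching and freshness can be illustrated with a small model. This is a hypothetical sketch, not the SDK's implementation: `ToyCachingServer` only borrows the names cache_tools_list, list_tools(), and invalidate_tools_cache() from the text, and a counter stands in for network round trips.

```python
import asyncio
from typing import Optional


class ToyCachingServer:
    """Hypothetical model of a server with an optional tool-list cache."""

    def __init__(self, cache_tools_list: bool = False) -> None:
        self.cache_tools_list = cache_tools_list
        self.remote_tools = ["search"]  # what the remote side currently offers
        self.fetch_count = 0            # simulated network round trips
        self._cached: Optional[list[str]] = None

    async def _fetch_tools(self) -> list[str]:
        self.fetch_count += 1
        return list(self.remote_tools)

    async def list_tools(self) -> list[str]:
        # Serve from the cache when caching is on and a list is stored.
        if self.cache_tools_list and self._cached is not None:
            return self._cached
        tools = await self._fetch_tools()
        if self.cache_tools_list:
            self._cached = tools
        return tools

    def invalidate_tools_cache(self) -> None:
        self._cached = None


async def demo() -> tuple[list[str], list[str], list[str], int]:
    server = ToyCachingServer(cache_tools_list=True)
    first = await server.list_tools()
    server.remote_tools.append("summarize")  # the remote tool set changes
    stale = await server.list_tools()        # still served from the cache
    server.invalidate_tools_cache()
    fresh = await server.list_tools()        # fetched again
    return first, stale, fresh, server.fetch_count


first, stale, fresh, fetches = asyncio.run(demo())
print(stale, fresh, fetches)
```

In this model the second call misses the newly added tool until the cache is invalidated, which is the failure mode to watch for when caching a tool list that is not actually static.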

Detailed Examples and Diagnostic Tracing

For complete, working examples of MCP integration, developers are encouraged to consult the official OpenAI Agents SDK documentation and its accompanying example code.

The SDK's tracing automatically captures MCP operations, including:

- Calls made to MCP servers to list their tools.

- MCP-related information on function (tool) calls.

These traces are useful for debugging, for analyzing performance, and for monitoring how an agent application interacts with its MCP servers.

With MCP integration in the OpenAI Agents SDK, developers can build agents that draw on a wide range of external tools and resources through a single, standard interface.