Phi-4: A 14B Parameter Open Model for Accelerated Research and Innovation, Now on Ollama

Meet Phi-4, Microsoft’s 14B parameter open model which, in many scenarios, can go head-to-head with OpenAI’s GPT-4o-mini. Even better? You can run it directly on Ollama using:

ollama run phi4

In this post, I’ll dive into what makes Phi-4 special, its primary use cases, and how you can leverage it in your own projects. Ready? Let’s get started!

1. A Quick Overview of Phi-4

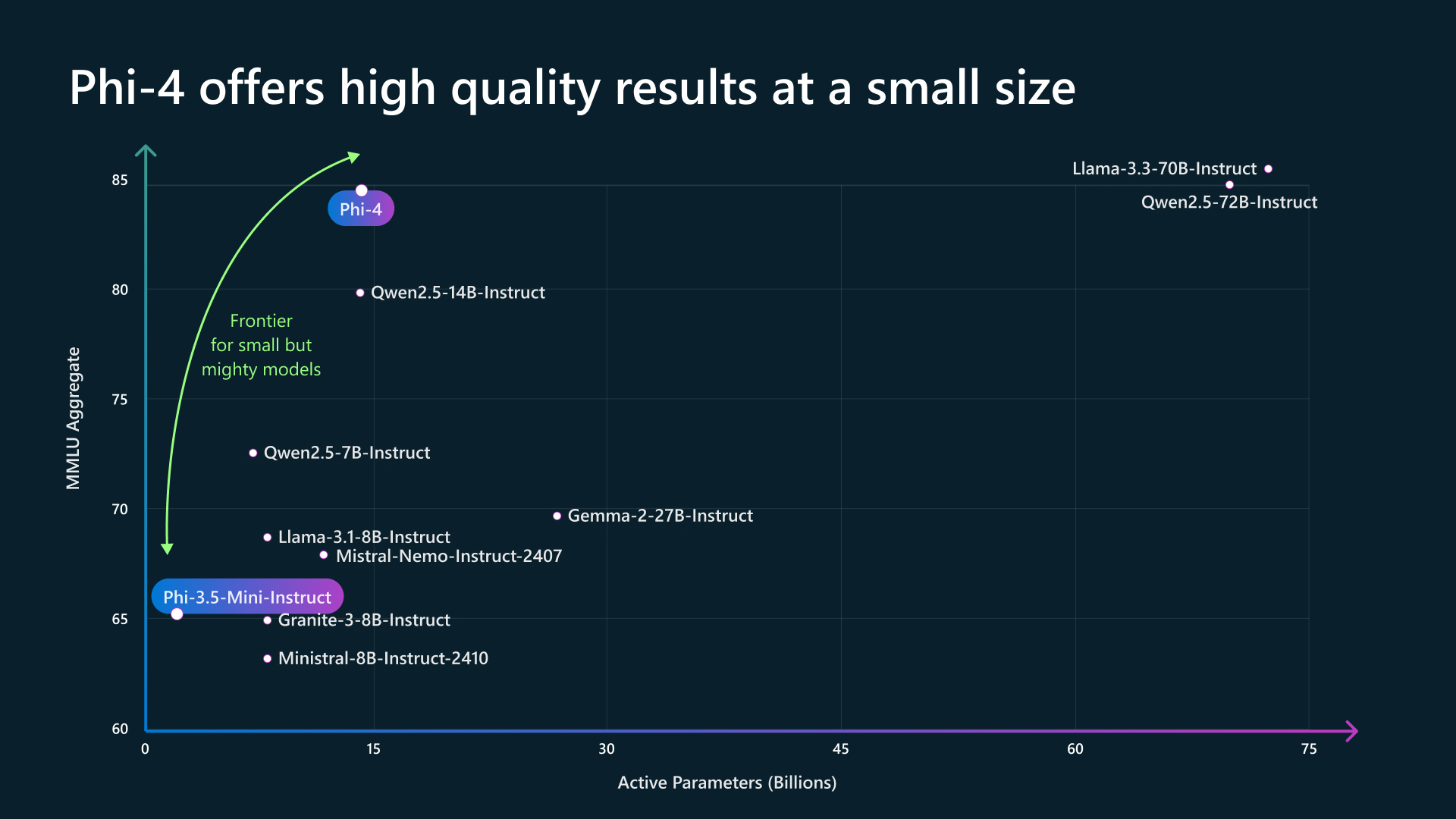

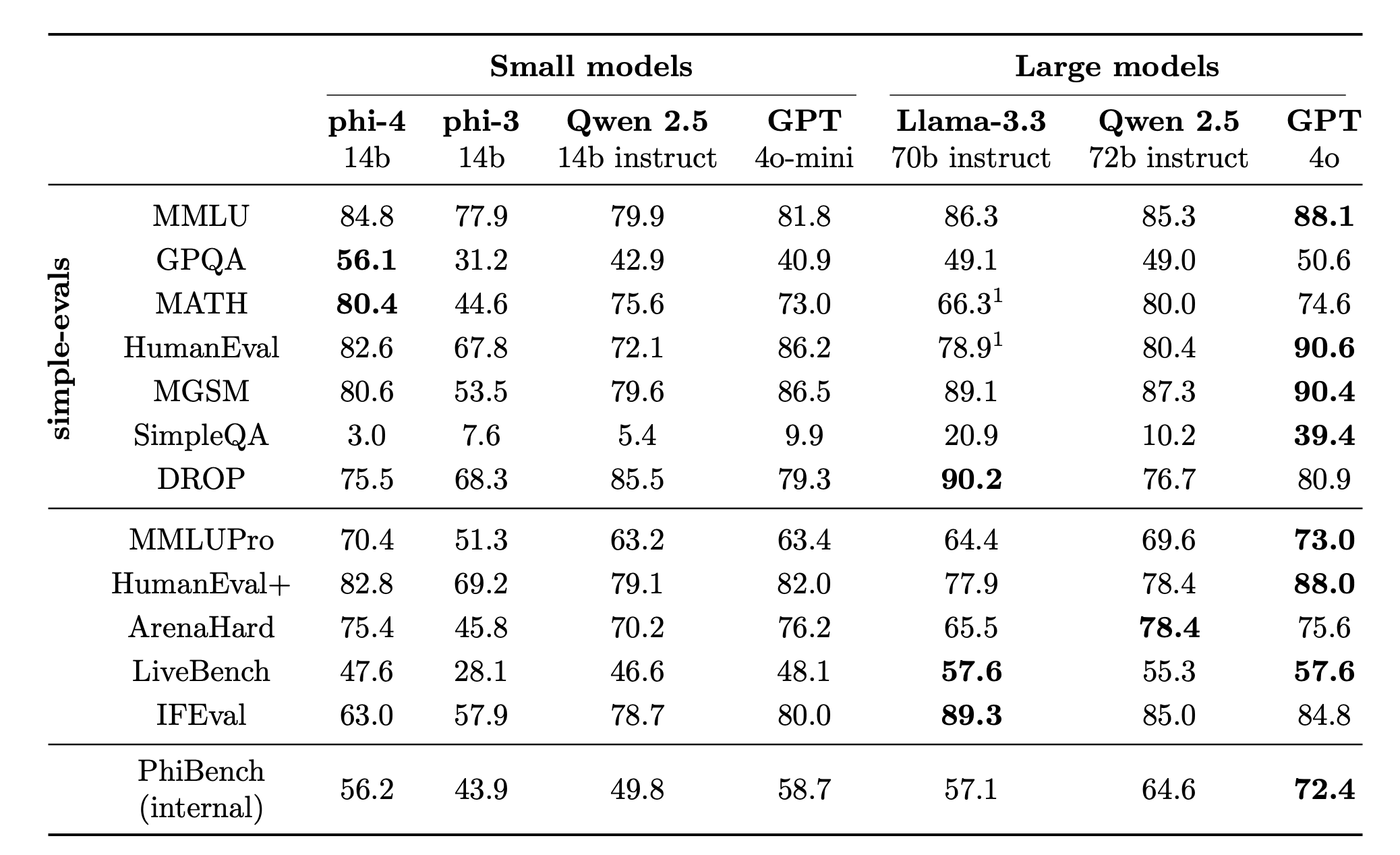

Phi-4 is a transformer-based language model with 14 billion parameters, designed for research and practical applications in natural language processing. While large language models are all the rage, Phi-4 stands out by offering competitive performance at a fraction of the size of the biggest heavyweights in the AI space.

Key Highlights

- Performance: In many scenarios, Phi-4 competes with GPT-4o-mini from OpenAI.

- Size: With 14B parameters, Phi-4 hits the sweet spot between performance and manageable resource usage.

- Availability: It’s fully open and accessible through Ollama, so you can integrate it into your workflows without a complicated setup.

2. Under the Hood: Technical Foundations

So, what makes Phi-4 tick? Well, at a high level, it uses a transformer architecture—the same revolutionary framework powering modern NLP. Below are some core aspects of its technical design:

- Pre-Training Regimen: Phi-4 was pre-trained on an extensive corpus of text, covering diverse domains like news articles, scientific papers, books, and web content. This broad-based training helps the model understand a wide range of topics.

- Parameter Efficiency: Despite boasting 14B parameters, Phi-4’s architecture is optimized to maintain high inference speed without demanding absurdly large GPU memory.

- Fine-Tuning Customizability: Developers can fine-tune Phi-4 using domain-specific data, giving you a specialized language model for tasks like summarization, code generation, or question answering.

3. Primary Use Cases

a) Memory/Compute Constrained Environments

“But how can a 14B parameter model work in memory-limited settings?” you might ask. Great question! Phi-4’s model weights can be quantized (stored at lower numeric precision, such as 8 or 4 bits per weight instead of 16), which shrinks the memory footprint and makes the model more feasible to run on GPUs with less VRAM. This means you can experiment on your local development machine or modest cloud setups without breaking the bank.
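To make the quantization point concrete, here is a minimal back-of-the-envelope sketch. It only counts the bytes needed to store the weights; the KV cache, activations, and runtime overhead come on top, and real quantized files usually land somewhat above these figures. The helper name `weight_memory_gb` is made up for this illustration.

```python
# Rough weight-memory estimate for a 14B-parameter model at several precisions.
# Counts weights only; KV cache, activations, and runtime overhead are extra.

PARAMS = 14_000_000_000  # parameter count stated in this post


def weight_memory_gb(num_params: int, bits_per_weight: float) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 10**9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


if __name__ == "__main__":
    for label, bits in [("fp32", 32), ("fp16", 16), ("int8", 8), ("int4", 4)]:
        print(f"{label}: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

At 16 bits the weights alone come to roughly 28 GB, while 4-bit quantization brings that down to roughly 7 GB, which is why a quantized build is the practical choice for a single consumer GPU.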

b) Latency-Bound Scenarios

Because Phi-4 has a relatively lean footprint (compared to gargantuan 100B+ parameter models), you can achieve faster inference and lower latency. This is particularly important for real-time applications—like chatbots, voice assistants, or interactive web services—where speed is a high priority.
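If you want to check latency on your own hardware rather than take it on faith, one option is to read the timing metadata that Ollama’s REST API attaches to a non-streaming response. The sketch below assumes the documented `eval_count`, `eval_duration`, and `total_duration` fields (durations in nanoseconds); the helper names are hypothetical, and the sample numbers are invented purely to show the arithmetic, not a benchmark of Phi-4.

```python
# Derive simple latency metrics from the timing fields of an Ollama response.
# Assumes durations are reported in nanoseconds, as in Ollama's API docs.

NS_PER_SECOND = 1e9


def tokens_per_second(response: dict) -> float:
    """Generation throughput: generated tokens divided by generation time."""
    duration_ns = response["eval_duration"]
    if duration_ns <= 0:
        raise ValueError("eval_duration must be positive")
    return response["eval_count"] / (duration_ns / NS_PER_SECOND)


def total_latency_seconds(response: dict) -> float:
    """End-to-end time for the request, including model load and prompt eval."""
    return response["total_duration"] / NS_PER_SECOND


# Invented sample values for illustration only (not a measurement).
sample = {
    "eval_count": 120,
    "eval_duration": 4_000_000_000,
    "total_duration": 5_000_000_000,
}

if __name__ == "__main__":
    print(f"{tokens_per_second(sample):.1f} tokens/s")
    print(f"{total_latency_seconds(sample):.1f} s total")
```

Comparing these two numbers across quantization levels or hardware gives you a quick read on whether a setup is fast enough for a real-time use case.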

c) Reasoning and Logic

Phi-4 isn’t just about spitting out text; it’s designed to tackle reasoning and logic-based tasks effectively. Whether you’re building an AI tutor system, a logical puzzle solver, or advanced data analysis tools, Phi-4’s training and architecture help it produce more accurate, context-aware responses.

4. How to Get Started with Phi-4 on Ollama

If you’re new to Ollama, it’s a lightweight framework that simplifies the process of running large language models locally. Here’s a quick start guide:

- Install Ollama: Head over to Ollama’s official website and follow the instructions for your operating system.

- Pull Phi-4: Ollama automatically pulls the Phi-4 model the first time you run it.

- Run the Model: Open your terminal or command prompt, then type:

ollama run phi4

That’s it! You’re now ready to interact with the model and see just how powerful it can be.
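Beyond the interactive prompt, you can also call the model from your own code. Here is a minimal sketch using only the Python standard library. It assumes Ollama is running locally on its default port (11434) and that the `phi4` model has already been pulled; `build_payload` and `generate` are illustrative helper names, not part of any official client.

```python
import json
import urllib.request

# Default local Ollama endpoint; change this if your server runs elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_payload(prompt: str, model: str = "phi4") -> dict:
    """Build a non-streaming request body for Ollama's generate endpoint."""
    return {"model": model, "prompt": prompt, "stream": False}


def generate(prompt: str, model: str = "phi4", url: str = OLLAMA_URL,
             timeout: float = 120.0) -> str:
    """Send the prompt to a running Ollama server and return the reply text."""
    data = json.dumps(build_payload(prompt, model)).encode("utf-8")
    request = urllib.request.Request(
        url, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, the full completion arrives in the "response" field.
    return body["response"]
```

With the server running, `print(generate("Explain quantization in one sentence."))` should print the model’s answer. Setting `"stream": False` keeps the example short; for a chat UI you would likely stream tokens instead.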

5. Potential Applications and Projects

Curious how you might leverage Phi-4 in your work? Here are just a few ideas:

- Interactive Chatbots: Build advanced conversational agents that can hold context for longer and reason about user inputs.

- Rapid Prototyping: If you’re an AI researcher or developer, Phi-4 provides a fast testbed for new ideas, algorithms, or architecture tweaks.

- Generative AI Features: Add new generative features like text summarization, blog writing, or code completion into your existing product lineup.

- Educational Tools: Create AI-driven tutoring systems or question-answering platforms that help users grasp complex topics.
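As a starting point for the chatbot idea above, here is a minimal sketch of a session that keeps conversation history and resends it on every turn, which is how the model “holds context.” It assumes a local Ollama server exposing the `/api/chat` endpoint on the default port; `ChatSession` and `post_chat` are hypothetical names for this illustration, and the transport is injectable so the history logic can be tried without a server.

```python
import json
import urllib.request
from typing import Callable, Optional

CHAT_URL = "http://localhost:11434/api/chat"  # assumed default local endpoint


def post_chat(payload: dict) -> dict:
    """POST a chat payload to the local Ollama server and return parsed JSON."""
    data = json.dumps(payload).encode("utf-8")
    request = urllib.request.Request(
        CHAT_URL, data=data, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return json.loads(resp.read().decode("utf-8"))


class ChatSession:
    """Keeps the running message list so each turn includes prior context."""

    def __init__(self, model: str = "phi4", system: Optional[str] = None,
                 transport: Callable[[dict], dict] = post_chat):
        self.model = model
        self.transport = transport
        self.messages = []
        if system:
            self.messages.append({"role": "system", "content": system})

    def ask(self, user_text: str) -> str:
        """Append the user turn, send the full history, and store the reply."""
        self.messages.append({"role": "user", "content": user_text})
        payload = {"model": self.model, "messages": self.messages,
                   "stream": False}
        reply = self.transport(payload)["message"]["content"]
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

Usage would look like `session = ChatSession(system="You are a patient tutor.")` followed by repeated `session.ask(...)` calls. Note that the history grows with every turn, so a longer-running bot would need to trim or summarize old messages to stay within the model’s context window.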

6. Roadmap and Future Enhancements

The open-source nature of Phi-4 means the community can directly contribute to improvements in:

- Model Optimization: Better quantization strategies, more efficient fine-tuning methods, or cutting-edge inference techniques.

- Extended Use Cases: Incorporating multilingual support or specialized domain knowledge (e.g., biomedical, legal, or financial data).

- Ecosystem Expansion: Tools, plugins, or wrappers that streamline the integration of Phi-4 into different software stacks.

7. Conclusion

Phi-4 might not be the biggest language model out there, but its 14B parameters strike an excellent balance between performance and resource efficiency. It’s open, community-driven, and aligned with modern development workflows. Whether you’re tackling research experiments, real-world enterprise applications, or just geeking out with the latest NLP tech, Phi-4 offers a flexible, high-performance solution that won’t break the bank—or your GPU.

So, if you’re looking for a powerful yet practical model to accelerate your AI projects, give Phi-4 a try on Ollama. I can’t wait to see what you build next!

Have questions or want to share your experience? Drop a comment, and let’s keep the conversation going. Happy building, folks!